We have written before about why regular releases are a good thing, and that we practise what we preach on GOV.UK, but we have never described what we actually do.

We have just over 50 applications that we release to GOV.UK, some more regularly than others. For example, Whitehall is released every day, whereas our release app is ironically released about once every six months.

Since launch in October 2012, we have released applications to our production environment several thousand times. Every deployment is tracked by our release app, which shows which version of an application has been deployed to each of our environments.

Our release process

While our development teams are building their applications, our Continuous Integration system creates a tag on every successful build of an application and merges master into a release branch. A successful build triggers a deploy of the release branch to our preview environment, so that the team can check their changes in a production-like environment and smoke out any major errors.

When a team has a build they want to deploy to production they do the following:

- Book a deploy slot (normally 30 minutes) in the release calendar giving details of the application, tag and expected changes.

- Check any notes against the application in the release app.

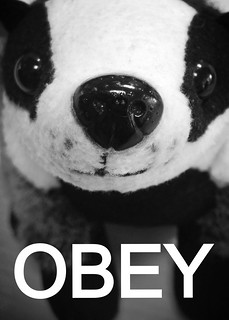

- Acquire the Badger of Deploy from the 2nd line support team.

- Check that the Badger of Deploy doesn't carry a note about the application you're deploying; such a note means the application is blocked from being released.

- Deploy to staging using the Jenkins job.

- Check that Smokey passes.

- Check the new functionality works as you would expect.

- Take a look at any alerts and metrics, just to check you haven’t broken something.

- Repeat the previous four steps (deploy, Smokey, functionality check, alerts and metrics) in production.

- Return the Badger of Deploy to someone on 2nd line.

- Stay around in the office for a while, just in case something goes wrong.

© David Thompson
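The staging-then-production part of the checklist can be modelled as a small loop. This is a hypothetical Python sketch, not GOV.UK's tooling: each `Step` wraps a check (deploying via Jenkins, running Smokey, looking at alerts) that we assume can report pass or fail for a given environment, and the loop never touches production until every step has passed on staging.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class Step:
    name: str
    # Hypothetical hook: given an environment name, did this step pass?
    passes: Callable[[str], bool]


def deploy_and_verify(
    steps: List[Step],
    environments: Sequence[str] = ("staging", "production"),
) -> List[str]:
    """Run every step in one environment before moving on to the next.

    Stops at the first failure, so a release that breaks staging
    never reaches production.
    """
    log = []
    for env in environments:
        for step in steps:
            ok = step.passes(env)
            log.append(f"{env}: {step.name}: {'ok' if ok else 'FAILED'}")
            if not ok:
                return log
    return log
```

Returning the log rather than raising keeps a record of exactly how far the release got, which is what you want when deciding whether to roll back.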

Our release principles

We have several principles that we work to when we do a release.

- Releases should be zero downtime

A release should not cause any downtime. For most of our apps we achieve this with Unicorn, which starts up new processes to handle new requests while the processes from the previous release finish any requests they are already serving. However, this replaces the process that Upstart originally started, so Upstart loses track of the process ID. To solve this problem, we wrote Unicorn Herder, which wraps Unicorn.
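Unicorn Herder's own code isn't described here, so the following is only a minimal Python sketch of the idea under our own assumptions: a long-lived wrapper stays in the foreground (so Upstart has a stable PID to track) and follows whichever master PID is currently in Unicorn's pidfile. It exits only after no live master has been seen for a few polls; the grace period covers the moment during a restart when the pidfile is being rewritten. The pidfile path and polling values are hypothetical, and signal forwarding is left out.

```python
import os
import time
from typing import Optional


def read_pid(pidfile: str) -> Optional[int]:
    """Return the PID written in a pidfile, or None if it is missing or unreadable."""
    try:
        with open(pidfile) as f:
            return int(f.read().strip())
    except (FileNotFoundError, ValueError):
        return None


def is_alive(pid: int) -> bool:
    """Signal 0 checks that a process exists without actually signalling it."""
    try:
        os.kill(pid, 0)
    except ProcessLookupError:
        return False
    except PermissionError:
        return True  # the process exists but belongs to another user
    return True


def herd(pidfile: str, poll_seconds: float = 1.0, grace_polls: int = 5) -> None:
    """Stay in the foreground for as long as some live master owns the pidfile.

    Re-reading the pidfile on every poll means we follow the master across
    zero-downtime restarts, even though its PID changes each time.
    """
    missing = 0
    while True:
        pid = read_pid(pidfile)
        if pid is not None and is_alive(pid):
            missing = 0
        else:
            missing += 1
            if missing >= grace_polls:
                return  # no master left: exit so the supervisor notices
        time.sleep(poll_seconds)
```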

- Teams are responsible for deploying their own work

The team that wrote the code is best placed to debug any issues that occur during its deploy. Owning the entire process is important, and it encourages people to fix any pain points they experience.

- One product team owns each release

Several of our applications are developed by more than one team. When such an application is released, only one team should own the release; currently, that is the team whose code was merged to master first. This also encourages frequent releases, so that teams are only releasing their own code.
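The ownership rule fits in a few lines. In this sketch the `Merge` record, the team names and the notion of a list of unreleased merges are all hypothetical; only the rule itself (earliest merge to master wins) comes from the description above.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass(frozen=True)
class Merge:
    team: str
    merged_at: datetime  # when this team's change reached master


def release_owner(unreleased_merges: List[Merge]) -> str:
    """The team whose code reached master first owns the next release."""
    if not unreleased_merges:
        raise ValueError("nothing to release")
    return min(unreleased_merges, key=lambda m: m.merged_at).team
```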

- Deployments should be repeatable

To make deployment easy, it should be repeatable and reproducible. We achieve this by using a parameterised Jenkins job which runs Capistrano to deploy most of our applications.

The usual pattern for Capistrano is that each host keeps a clone of the repository and updates that local copy on deploy. However, not all of our code is hosted publicly on GitHub; for added security, some is in internally hosted Git repositories. To deal with this, we use the rsync with remote cache deployment strategy for Capistrano, so that only our Jenkins machine needs a VPN connection to where our private repositories reside.
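Roughly, the rsync with remote cache strategy splits a deploy into three stages: update a checkout on the deploy machine, rsync it to a cache on each host, then copy that cache into a fresh release directory. The Python sketch below only builds the command lines rather than running them, and the paths, host names and directory layout (`shared/cached-copy`, `releases/<name>`) are assumptions modelled on Capistrano's conventions, not GOV.UK's actual configuration.

```python
import shlex
from typing import List, Sequence


def remote_cache_deploy_commands(
    local_cache: str,
    revision: str,
    hosts: Sequence[str],
    deploy_to: str,
    release_name: str,
) -> List[List[str]]:
    """Build (but do not run) the commands for one rsync-with-remote-cache deploy."""
    # 1. Only the deploy machine talks to Git, so only it needs the VPN.
    commands = [
        ["git", "-C", local_cache, "fetch", "--tags", "origin"],
        ["git", "-C", local_cache, "checkout", "--force", revision],
    ]
    cached_copy = f"{deploy_to}/shared/cached-copy"
    release_dir = f"{deploy_to}/releases/{release_name}"
    for host in hosts:
        # 2. Push the checkout to a cache on each host; rsync only sends what changed.
        commands.append([
            "rsync", "-az", "--delete", "--exclude=.git",
            f"{local_cache}/", f"{host}:{cached_copy}/",
        ])
        # 3. On the host, copy the cache into a fresh release directory.
        commands.append([
            "ssh", host,
            f"cp -a {shlex.quote(cached_copy)} {shlex.quote(release_dir)}",
        ])
    return commands
```

Keeping a cache on each host means rsync transfers only the files that changed since the last deploy, while the application servers never need access to the private repositories.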

- Applications should be released regularly

Releasing regularly means that each release contains only small changes, which makes debugging any issues after a deploy easier.

- Only one release at a time

Having only one thing going out to production at a time helps to narrow the list of suspects if debugging is required. To ensure that only one release happens at a time, we have the Badger of Deploy (a stuffed toy). The Badger of Deploy acts as a physical token that must be in the possession of anyone releasing an application.
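The badger is, in effect, a mutex you can hold in your hand. As a hypothetical illustration (GOV.UK uses the stuffed toy, not software), here is the same rule as a small Python class, including the blocking notes mentioned in the release checklist: an application with a note against it cannot be released even when the badger is free. The class and method names are our own.

```python
import threading
from typing import Dict, Optional


class BadgerOfDeploy:
    """Software stand-in for the physical token: at most one holder at a time."""

    def __init__(self) -> None:
        self._lock = threading.Lock()      # guards holder and notes
        self.holder: Optional[str] = None
        self.notes: Dict[str, str] = {}    # app name -> reason it is blocked

    def block(self, app: str, reason: str) -> None:
        with self._lock:
            self.notes[app] = reason

    def unblock(self, app: str) -> None:
        with self._lock:
            self.notes.pop(app, None)

    def try_acquire(self, person: str, app: str) -> bool:
        """Hand over the badger unless someone holds it or the app is blocked."""
        with self._lock:
            if self.holder is not None or app in self.notes:
                return False
            self.holder = person
            return True

    def give_back(self, person: str) -> None:
        with self._lock:
            if self.holder != person:
                raise ValueError(f"{person} does not hold the badger")
            self.holder = None
```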

- Normal releases should only happen during working hours

This means that if something goes wrong with recently deployed code, a large part of the GOV.UK development team will be available to help fix it. It also means that the person deploying is unlikely to go home leaving GOV.UK broken.
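A booking tool could enforce this rule when a deploy slot is booked. This Python sketch assumes a working day of 09:30 to 17:30, Monday to Friday, and a one-hour buffer for staying around after the slot; those figures are assumptions, not GOV.UK policy, and bank holidays are ignored. Only the 30-minute default slot comes from the checklist above.

```python
from datetime import datetime, time, timedelta

# Assumed working day; the post does not say which hours GOV.UK uses.
DAY_START = time(9, 30)
DAY_END = time(17, 30)


def slot_fits_working_hours(
    start: datetime,
    slot: timedelta = timedelta(minutes=30),       # the usual deploy slot
    stay_around: timedelta = timedelta(hours=1),   # hypothetical buffer afterwards
) -> bool:
    """True if a deploy slot, plus time to stay around afterwards, fits in one weekday."""
    if start.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        return False
    end = start + slot + stay_around
    return (
        start.time() >= DAY_START
        and end.date() == start.date()
        and end.time() <= DAY_END
    )
```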

- Changes should be backwards compatible, except in exceptional circumstances

With approximately 50 applications, a breaking change may force you to deploy lots of applications together, which will make your life hard.

- Staging should be a maximum of one release ahead of production

We try to avoid releasing multiple applications at once to staging, preferring to release one application to staging, then production, before moving on to the next. This ensures that problems in any environment can be isolated to one application and one release, making them easier to debug.

If a release fails on staging, we roll back to the previous version unless we can fix it within the release slot.
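Keeping staging at most one release ahead also makes rollback simple: the version to return to on staging is whatever production is running. The sketch below, with hypothetical app names and tag formats, checks the rule before a staging deploy and works out the rollback target.

```python
from typing import Dict, List

Versions = Dict[str, str]  # app name -> deployed release tag (hypothetical format)


def apps_ahead(staging: Versions, production: Versions) -> List[str]:
    """Apps whose staging version is not yet in production."""
    return sorted(app for app, tag in staging.items() if production.get(app) != tag)


def may_deploy_to_staging(app: str, staging: Versions, production: Versions) -> bool:
    """Allow a staging deploy only if no *other* app is waiting to reach production."""
    return all(other == app for other in apps_ahead(staging, production))


def rollback_target(app: str, production: Versions) -> str:
    """With staging at most one release ahead, the previous version is production's."""
    return production[app]
```

Allowing the app that is already ahead to redeploy covers fixing a failed release within the slot.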

- Deployments should be tested for regressions

With around 50 applications, a change to one may have effects elsewhere, so it is worth running tests after a deploy to check that the site is behaving as you would expect.

We achieve this by using Smokey, a collection of behaviour-driven development (BDD) tests written in Cucumber that test the important functionality of GOV.UK. Different teams have contributed Smokey tests to make sure their applications are tested on every deploy.

Smokey tests are in addition to the more extensive unit tests run by our Continuous Integration system: Smokey is a smoke test of user journeys through the website.

Smokey is triggered to run after every deploy and is also continuously run and monitored by our monitoring stack.
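Smokey itself is written in Cucumber; purely as an illustration of what a smoke test checks, here is a minimal Python sketch. The `Check` type, paths and expected text are hypothetical, and `fetch` is injected so the same checks could run against staging, production or a fake in tests.

```python
import urllib.error
import urllib.request
from typing import Callable, Iterable, List, NamedTuple, Tuple

Fetch = Callable[[str], Tuple[int, str]]  # path -> (HTTP status, body)


class Check(NamedTuple):
    path: str          # a page on one user journey
    must_contain: str  # text a working page should show


def smoke_test(fetch: Fetch, checks: Iterable[Check]) -> List[str]:
    """Run every check and return a list of failures (empty means pass)."""
    failures = []
    for check in checks:
        status, body = fetch(check.path)
        if status != 200:
            failures.append(f"{check.path}: HTTP {status}")
        elif check.must_contain not in body:
            failures.append(f"{check.path}: expected text {check.must_contain!r} not found")
    return failures


def http_fetch(base_url: str, timeout: float = 10.0) -> Fetch:
    """A real fetcher for a deployed environment, using only the standard library."""
    def fetch(path: str) -> Tuple[int, str]:
        try:
            with urllib.request.urlopen(base_url + path, timeout=timeout) as resp:
                return resp.status, resp.read().decode("utf-8", "replace")
        except urllib.error.HTTPError as err:
            return err.code, ""
    return fetch
```

Returning every failure, rather than stopping at the first, suits continuous monitoring as well as post-deploy runs: one report shows all the journeys that are broken.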

Because we're government, we also have some additional constraints around who has access to production. In general, production access requires a track record at GDS and security clearance.

© bob

If this sounds like a good place to work, take a look at Working for GDS - we're usually in search of talented people to come and join the team.

You can follow Bob on Twitter, sign up now for email updates from this blog or subscribe to the feed.

6 comments

Comment by Dan Hilton posted on

What is 2nd line? Do you mean 2nd line support or is that a particular team reference?

Comment by bob posted on

2nd line does refer to a 2nd line support team, made up of two developers and a Web Ops engineer, who are the first port of call for any issues during the day.

Comment by Tom posted on

Thanks for sharing.

I'd be interested to read more about how you integrate the multiple applications together, as well as how and when you decide to break off functionality into its own application in the first place.

I had a quick search but couldn't find any posts on the topic. Has this information been shared anywhere?

Comment by Brad Wright posted on

Hi Tom,

The applications are joined by RESTful HTTP calls, although we've recently introduced a message queue as used by the new site mirroring process.

We've not really blogged about our approach to microservices and data management on GOV.UK, but we hope to soon. The very very short version is that the applications are divided broadly by user needs, eg devolved government publishers vs. in-house GDS content designers.

Comment by Geoff posted on

Thanks for sharing

I'd be interested to know what you do about database patching. If you're using Unicorn, do you have to keep your databases consistently backwards compatible, because it is impossible to sync long-running database updates with swapping the code over? Also, when you have huge numbers of servers, syncing all the Unicorns to serve new code at the same time might be problematic (though it probably works nicely for a small number of servers). And where multiple apps all have to change in sync, you'd have to keep all the dependencies backwards compatible too. I think a better (but more expensive) solution to this is blue-green releasing with route switching, AND it allows testing of the "about-to-be-released" code in production prior to the release. I'd be interested to hear what you think about this alternative approach.

Comment by Brad Wright posted on

Hi Geoff,

Our general strategy with long-running migrations is to only perform schema changes at deploy time and run migrations as a separate task when it won't introduce issues on the site.

That said, the main part of our site is a MongoDB-backed HTTP API, so schema migrations on the core part of the site don't happen as often as you'd expect. We're also expecting to move much more towards thin frontend clients and template layers in the public-facing parts of the site, which means migrations will matter even less there.

Blue/green is something we'd be more interested in if we frequently had to do expensive migrations but, as I mention above, it's not typically a problem we experience.