Web server configuration can be tricky to get right. Applications like nginx or Apache are flexible and powerful. However, this flexibility can result in configuration that is hard to understand, hard to maintain, and easy to break by accident.

On the GOV.UK Verify beta service, we use nginx as our internet-facing web server. It performs a number of important tasks, including:

- terminating TLS and implementing Strict-Transport-Security

- proxying traffic to our frontend application

- routing analytics traffic to our internal Piwik server

- allowing us to put the site into a "maintenance mode" during disruptive upgrades

- serving robots.txt

This configuration had grown organically over time, to the point where we had multiple screens of if blocks controlling what happens in each of a number of scenarios. We had become uncomfortable editing it for fear of unintentionally breaking things. As long as we weren't changing the configuration, we were confident it would continue to work, but adding features or changing existing ones felt risky.

So when we wanted to do a piece of work to serve a better error message when the web applications were not functioning, we had a low level of confidence we could implement this successfully. To gain confidence that we were doing the right thing and not breaking any existing behaviour, we decided that it was time to write tests for our nginx configuration.

Test Concept

Our test concept is very similar to the concept for GOV.UK's testing of CDN configuration. Our test spawns an instance of nginx with our configuration, and executes HTTP requests against it, making assertions about the responses that nginx gives. In some scenarios, nginx proxies the request through to our frontend web application; in these scenarios, we create a mock server under our control to play the role of the frontend web application without needing to start up a real application server.

Technology Choice

Our technology choice was largely driven by our existing infrastructure tests. We use puppet to manage our servers and have long been using rspec-puppet to run unit-level tests that check we are creating the resources we expect. Our continuous integration server runs all of our rspec-puppet tests on each commit. Therefore, if we added an rspec test for nginx configuration, it could run in the same suite of tests as our puppet tests.

Using rspec also meant that we were working in Ruby. Our nginx configuration is generated by puppet, which uses ERB templates to generate config files. ERB is built into Ruby, so our rspec test could take the same template and generate configuration locally.
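One practical wrinkle of running these tests in the same suite is that not every machine running the suite will have nginx installed. The spec shown later in this post is tagged `:needs_nginx => true`; the post does not say how that tag is consumed, so the following is only a sketch of one way it could be used. The helper name `executable_on_path?` is hypothetical.

```ruby
# Returns true if an executable file called `name` exists in any PATH directory.
# (Hypothetical helper, not part of rspec or of our real test suite.)
def executable_on_path?(name)
  ENV.fetch('PATH', '').split(File::PATH_SEPARATOR).any? do |dir|
    candidate = File.join(dir, name)
    File.file?(candidate) && File.executable?(candidate)
  end
end

# Only touch rspec if it is loaded, so this file can also be run on its own.
if defined?(RSpec)
  RSpec.configure do |config|
    # Assumption: examples tagged :needs_nginx are skipped when nginx is absent.
    config.filter_run_excluding(needs_nginx: true) unless executable_on_path?('nginx')
  end
end
```

With something like this in a spec helper, the puppet tests would still run on a machine without nginx, and the nginx tests would run wherever the binary is available.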

Making our nginx configuration testable

When we started, our nginx configuration had a number of features which made it difficult to test. Most of these were assumptions about the environment that nginx was running in; it was hardcoded to:

- listen on port 443, which requires root privileges

- find our frontend app at https://frontend, assuming that this would resolve in DNS

- serve static files from /srv/www, which might not exist on a dev machine

In order to be able to start a local nginx for testing, we had to make these features configurable. This process is similar to the object-oriented process of injecting dependencies and configuration to classes to be able to substitute different values in tests.
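As an illustration of this kind of parameterisation, the sketch below renders a cut-down vhost template with ERB. The template text and the `VhostSettings` class are hypothetical and far simpler than our real configuration (a real vhost would also need `ssl_certificate` and other directives). Only the three instance variable names match the ones used by the test code later in this post.

```ruby
require 'erb'

# Hypothetical, cut-down vhost template. The three formerly hardcoded
# values are read from instance variables instead.
VHOST_TEMPLATE = <<~'NGINX'
  server {
    listen <%= @listen_port %> ssl;
    root <%= @document_root %>;

    location / {
      proxy_pass <%= @url_for_frontend %>;
    }
  }
NGINX

# Holds the injected values and renders a template against them.
class VhostSettings
  def initialize(listen_port:, url_for_frontend:, document_root:)
    @listen_port = listen_port
    @url_for_frontend = url_for_frontend
    @document_root = document_root
  end

  # ERB looks up @listen_port etc. through this object's binding.
  def render(template)
    ERB.new(template).result(binding)
  end
end

# The values that used to be hardcoded, and the values a local test might use.
production = VhostSettings.new(listen_port: '443',
                               url_for_frontend: 'https://frontend',
                               document_root: '/srv/www')
under_test = VhostSettings.new(listen_port: '8443',
                               url_for_frontend: 'https://localhost:50193',
                               document_root: File.join(Dir.pwd, 'www'))

puts production.render(VHOST_TEMPLATE)
puts under_test.render(VHOST_TEMPLATE)
```

The same template produces both configurations, which is what lets the test exercise the real template rather than a copy of it.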

In addition, our configuration file consisted of a single server block, which represents a single virtual host. In order to start, nginx needs a complete configuration file, including an http block and an events block. Our test code needed to create a minimal harness to contain the virtual host in order to get nginx started. Once we had done this, our code for launching an nginx server from a template looked like this:

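    require 'erb'
    require 'tempfile'

    # Renders the vhost template inside a minimal nginx config, starts nginx
    # in the foreground and returns its pid.
    def start_nginx
      erb_filename = File.join(File.dirname(__FILE__), '../templates/edge_server.nginx.erb')

      # Values the template reads through the binding passed to ERB below.
      @url_for_frontend = "https://localhost:50193"
      @listen_port = '8443'
      @document_root = File.join(File.dirname(__FILE__), 'www')

      vhost_renderer = ERB.new(File.read(erb_filename))
      nginx_pid = nil

      Tempfile.open('test_nginx_config') do |f|
        # Minimal harness: nginx needs events and http blocks around the vhost.
        f.write <<~END
          error_log #{File.expand_path('../../../../test_nginx_error.log', __FILE__)} emerg;
          events { worker_connections 2000; }
          http {
            access_log off;
            #{vhost_renderer.result(binding)}
          }
        END
        f.flush

        nginx_pid = Process.spawn("nginx", "-c", f.path, "-g", "daemon off; pid #{File.expand_path('../../../../nginx.pid', __FILE__)};")
        # Wait inside the block so nginx has read the config while the tempfile still exists.
        wait_for_port_to_open(8443)
      end

      nginx_pid
    end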
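The `wait_for_port_to_open` helper is not shown above. A minimal sketch of how such a helper might work is to poll the port with a TCP connection until it succeeds or a deadline passes. The keyword arguments `host`, `timeout` and `interval` are assumptions added for illustration; the call above only passes the port.

```ruby
require 'socket'

# Hypothetical implementation: polls until a TCP connection to host:port
# succeeds, and raises if that has not happened within `timeout` seconds.
def wait_for_port_to_open(port, host: 'localhost', timeout: 10, interval: 0.1)
  deadline = Process.clock_gettime(Process::CLOCK_MONOTONIC) + timeout
  loop do
    begin
      # A successful connect means something is listening; close it straight away.
      TCPSocket.new(host, port).close
      return true
    rescue Errno::ECONNREFUSED, Errno::EHOSTUNREACH, Errno::ETIMEDOUT
      if Process.clock_gettime(Process::CLOCK_MONOTONIC) >= deadline
        raise "port #{port} did not open within #{timeout}s"
      end
      sleep interval
    end
  end
end
```

Raising on timeout, rather than returning false, means a misconfigured nginx that never starts listening fails the test run immediately instead of producing a confusing connection error in the first example.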

Describing testcases

Once we had the building blocks in place to be able to launch an nginx instance from rspec, we started writing tests for existing behaviour. We chose these scenarios for their simplicity and for the potential to test-drive the behaviour change we wanted to introduce. Our scenarios were:

- When I request the root path

/, I should get redirected to GOV.UK, whether or not our frontend web application is running - Given our frontend application is running, when I request

/start, then I should get a proxied response from the frontend server - Given our frontend application is not running, when I request

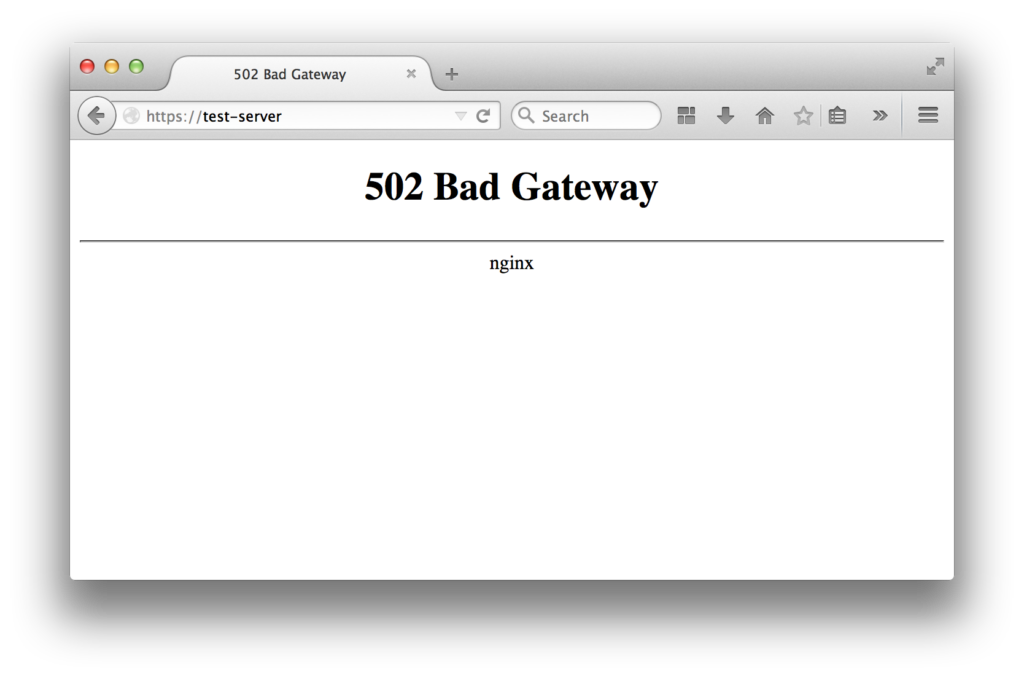

/start, then I should get a 502 Bad Gateway response from nginx.

We chose to represent our testcases in tabular form in our code:

    require 'net/http'

    describe 'edge server nginx vhost', :needs_nginx => true do
      TEST_CASES = [#frontend?, URL, status, content
        [true,  '/',      301, 'https://www.gov.uk/'],
        [false, '/',      301, 'https://www.gov.uk/'],
        [true,  '/start', 200, '/start'],
        [false, '/start', 502, 'Bad Gateway']]

      # One nginx instance is shared by all examples.
      before(:all) do
        @nginx_pid = start_nginx
        @https = Net::HTTP.new('localhost', 8443)
        @https.use_ssl = true
      end

      after(:all) do
        Process.kill("TERM", @nginx_pid)
        Process.wait(@nginx_pid)
      end

      TEST_CASES.each do |frontend_enabled, path, expected_status, expected_content|
        specify "#{path} returns #{expected_status} with #{expected_content} with frontend #{frontend_enabled ? 'up' : 'down'}" do
          begin
            # The fake frontend is started per example, only when the case needs it.
            frontend_pid = nil
            if frontend_enabled
              frontend_pid = start_fake_frontend
            end

            resp = @https.get(path)
            expect(resp.code.to_i).to eq(expected_status)

            # Redirects are checked by Location header, other responses by body.
            if resp.is_a? Net::HTTPRedirection
              expect(resp['Location']).to eq(expected_content)
            else
              expect(resp.body).to include(expected_content)
            end
          ensure
            if frontend_pid
              Process.kill("KILL", frontend_pid)
              Process.wait(frontend_pid)
            end
          end
        end
      end
    end
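The spec calls `start_fake_frontend`, which is not shown in this post. The sketch below is one possible shape for it, not our actual implementation: a forked child process that accepts TLS connections with a throwaway self-signed certificate and answers every request with a 200 whose body echoes the requested path (which is what the `'/start'` content check in the table relies on). The `self_signed_context` helper, the `port` parameter and the response text are all assumptions.

```ruby
require 'socket'
require 'openssl'

# Builds a TLS context around a throwaway self-signed certificate.
def self_signed_context
  key = OpenSSL::PKey::RSA.new(2048)
  cert = OpenSSL::X509::Certificate.new
  cert.version = 2
  cert.serial = 1
  cert.subject = cert.issuer = OpenSSL::X509::Name.parse('/CN=localhost')
  cert.public_key = key.public_key
  cert.not_before = Time.now - 60
  cert.not_after = Time.now + 3600
  cert.sign(key, OpenSSL::Digest.new('SHA256'))

  ctx = OpenSSL::SSL::SSLContext.new
  ctx.cert = cert
  ctx.key = key
  ctx
end

# Starts the fake frontend in a child process and returns the child's pid.
def start_fake_frontend(port = 50193)
  # Bind in the parent so the port is already open when this method returns.
  tcp = TCPServer.new('127.0.0.1', port)
  pid = fork do
    ssl = OpenSSL::SSL::SSLServer.new(tcp, self_signed_context)
    loop do
      begin
        client = ssl.accept
        path = client.gets.to_s.split[1].to_s   # "GET /start HTTP/1.1" -> "/start"
        # Read and discard the request headers.
        loop do
          line = client.gets
          break if line.nil? || line == "\r\n"
        end
        body = "fake frontend served #{path}"
        client.write("HTTP/1.1 200 OK\r\n" \
                     "Content-Type: text/plain\r\n" \
                     "Content-Length: #{body.bytesize}\r\n" \
                     "Connection: close\r\n\r\n#{body}")
        client.close
      rescue OpenSSL::SSL::SSLError, SystemCallError, IOError
        # Ignore failed handshakes and dropped connections; keep serving.
      end
    end
  end
  tcp.close # the child keeps its own copy of the listening socket
  pid
end
```

Because the server runs in its own process, the spec's `ensure` block can stop it with `Process.kill` and `Process.wait`, leaving the port closed again for the "frontend down" cases.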

Test-driving the change we wanted to see

Now that we had tests around our nginx configuration, we could use them to drive the change that we wanted. The problem we were trying to solve was: if our frontend application server was down, we would serve the default nginx "Bad Gateway" page, resulting in a poor experience for the user.

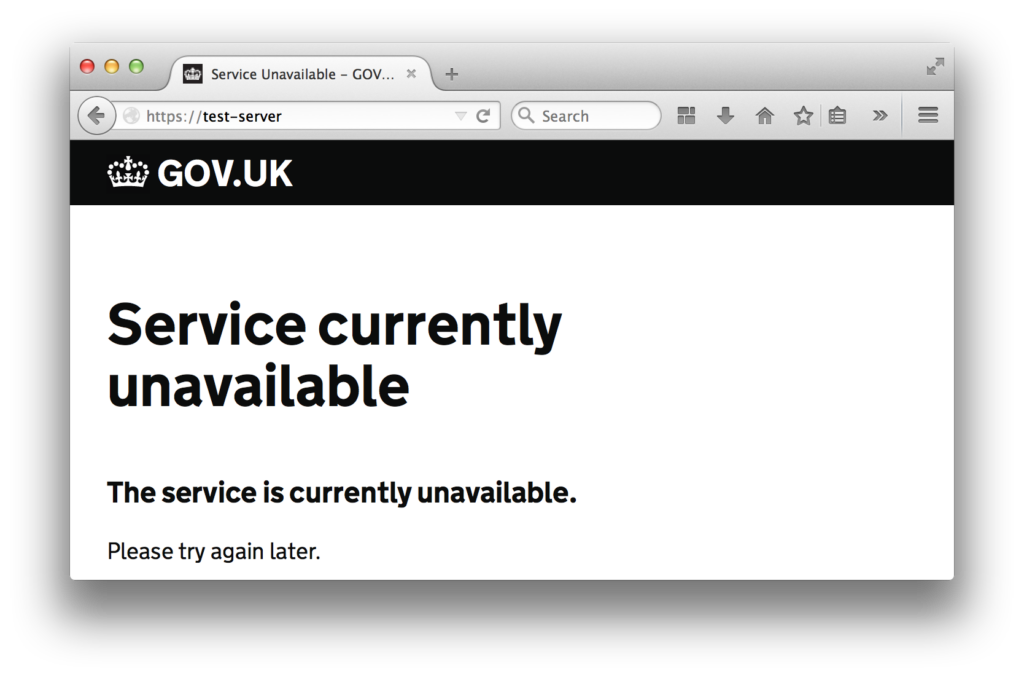

We created a static HTML page to serve instead of the default.

We then modified our tests to assert that we were serving content from this static page, rather than nginx's default behaviour:

    TEST_CASES = [#frontend?, URL, status, content
      [true,  '/',      301, 'https://www.gov.uk/'],
      [false, '/',      301, 'https://www.gov.uk/'],
      [true,  '/start', 200, '/start'],
      [false, '/start', 503, 'The service is currently unavailable']]

This test failed, because we had not implemented the functionality we needed. We then updated our nginx configuration to serve up the new page.

Refactoring our nginx configuration

Having created a test harness for nginx and written some tests, we now had the tools to give us confidence to refactor our nginx configuration. We were making extensive use of the if directive, which is particularly difficult to reason about. Previously, we had left this alone because it worked and we were afraid to change it for fear of accidentally breaking something. The tests gave us confidence to tackle some of the complexity of our configuration, allowing us to replace some usages of if with try_files, and remove unused location blocks.

Summary

These tests have given us the confidence to maintain our nginx configuration with the same care that we maintain the rest of our code. We can now drive out new functionality in a red-green-refactor test-driven development cycle, and our suite of tests ensures that regressions in behaviour will be caught before hitting production.

If this sounds like a good place to work, take a look at Working for GDS - we're usually in search of talented people to come and join the team.

You can follow Phil on Twitter.

2 comments

Comment by Chris Adams posted on

I've found it useful to keep the test cases separate from the webserver setup/teardown logic so you can run them independently when troubleshooting other problems. It's really nice to have a simple command-line utility which can be used to verify any given HTTP server.

Comment by Tom Birmingham posted on

The test produced 502 Bad Gateway and the solution fixes UX for 503 Service Unavailable!? What about the 502? 500 etc?

502 Bad Gateway is a different response to 503 Service Unavailable. Miscommunication to users and search engines is also a bad experience. Lipstick doesn't change words.