On GOV.UK Verify we’ve moved away from manually releasing new versions of our frontend to a continuous deployment model. The frontend is the component that produces the web pages users interact with during their Verify journey.

Our frontend is ‘stateless’. This means the frontend microservice does not keep any of the user information it needs in an internal datastore. The frontend instances run as Docker containers on AWS ECS (Elastic Container Service).

Being stateless is good practice for microservices. It lets us scale easily, because we can run more than one instance of the frontend at the same time.

We needed to think about how to introduce frontend changes seamlessly, without disrupting the user experience. We also wanted to be able to vary the number of instances running the old and new versions of the microservice.

We now manage the frontend with load balancing and zero downtime releases.

Load balancing and zero downtime releases

We currently run multiple instances of the frontend and use a load balancer to spread requests across them. We run a minimum of 2 instances and add more depending on the load.

When we deploy a new version of the frontend, we follow the same 3-step approach every time:

- Take one of the existing instances out of action.

- Upgrade the version of the application running on the instance.

- Put the instance back into service.

We then repeat these steps for each of the other instances. Because the remaining instances stay in service while one is being upgraded, we can keep serving requests with no interruption for users.
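The 3 steps above amount to a rolling deployment. The sketch below models it with a toy in-memory load balancer; Instance, LoadBalancer and rolling_deploy are hypothetical names, and this is an illustration only, not Verify's deployment tooling. It also suggests why a minimum of 2 instances matters: with a single instance, taking it out of action would leave nothing to serve requests.

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    """A hypothetical frontend instance registered with the load balancer."""
    name: str
    version: str
    in_service: bool = True


@dataclass
class LoadBalancer:
    """A toy load balancer that only routes requests to in-service instances."""
    instances: list[Instance] = field(default_factory=list)

    def serving(self) -> list[Instance]:
        return [i for i in self.instances if i.in_service]


def rolling_deploy(lb: LoadBalancer, new_version: str) -> list[int]:
    """Upgrade instances one at a time; return how many were serving during each upgrade."""
    serving_during_upgrade = []
    for instance in lb.instances:
        # 1. Take the instance out of action.
        instance.in_service = False
        serving = len(lb.serving())
        if serving == 0:
            # Restore the instance and stop: upgrading now would drop all traffic.
            instance.in_service = True
            raise RuntimeError("a rolling deploy needs at least 2 instances")
        serving_during_upgrade.append(serving)
        # 2. Upgrade the version of the application on the instance.
        instance.version = new_version
        # 3. Put the instance back into service.
        instance.in_service = True
    return serving_during_upgrade


lb = LoadBalancer([Instance("frontend-1", "v1"), Instance("frontend-2", "v1")])
print(rolling_deploy(lb, "v2"))           # [1, 1]
print([i.version for i in lb.instances])  # ['v2', 'v2']
```

With 2 instances, one instance is always serving while the other is upgraded; adding instances raises that number.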

Issues with assets

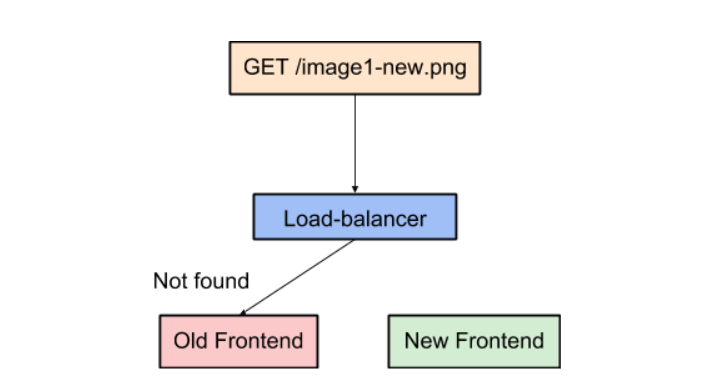

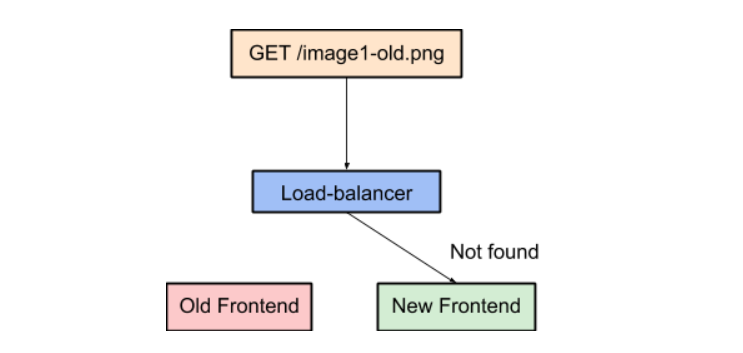

Previously, each new frontend release generated assets, such as JavaScript, images and CSS, with different filenames from the previous release. This caused problems for our zero downtime release pipeline, especially when one new and 2 old frontends were running at the same time, because each version of the frontend expected its own set of asset filenames.
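The post does not say how the filenames were generated. A common scheme, and the assumption in this sketch, is to fingerprint each asset by inserting a hash of its contents into the filename, so any change to a file gives it a new name. The fingerprint function below is hypothetical, not Verify's implementation.

```python
import hashlib
from pathlib import PurePosixPath


def fingerprint(path: str, content: bytes, length: int = 8) -> str:
    """Insert a short SHA-256 digest of the file contents before its extension."""
    p = PurePosixPath(path)
    digest = hashlib.sha256(content).hexdigest()[:length]
    return str(p.with_name(f"{p.stem}-{digest}{p.suffix}"))


old_name = fingerprint("stylesheets/app.css", b"body { color: black; }")
new_name = fingerprint("stylesheets/app.css", b"body { color: #0b0c0c; }")
# Both names look like stylesheets/app-<8 hex chars>.css, but the hashes differ
# because the contents differ.
print(old_name, new_name)
```

Under this scheme, a frontend built from one release only knows the filenames from that release, which is the mismatch described here.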

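We found this could cause 2 main issues: a page served by a new frontend could request new assets from an old frontend that did not have them, and a page served by an old frontend could request old assets from a new frontend that no longer had them.

Because our frontend uses progressive enhancement, users could still use the service. But they might end up looking at a page without the images, styling or JavaScript we expected it to have.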
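To see why mixed versions break asset loading, here is a toy simulation under simplifying assumptions: the load balancer is strict round-robin, each instance only holds its own release's assets, and the asset paths are made up for illustration.

```python
from itertools import cycle

# Made-up asset paths: each release only ships the assets from its own build.
ASSETS_BY_VERSION = {
    "old": {"/assets/app-1a2b3c4d.css"},
    "new": {"/assets/app-5e6f7a8b.css"},
}


def request_asset(instances: list[str], path: str, n_requests: int) -> list[int]:
    """Send n_requests for path, round-robin across instances; return status codes."""
    rotation = cycle(instances)
    statuses = []
    for _ in range(n_requests):
        version = next(rotation)  # the version running on the chosen instance
        statuses.append(200 if path in ASSETS_BY_VERSION[version] else 404)
    return statuses


mid_release = ["new", "old", "old"]  # one new and 2 old frontends
print(request_asset(mid_release, "/assets/app-5e6f7a8b.css", 3))  # [200, 404, 404]
print(request_asset(mid_release, "/assets/app-1a2b3c4d.css", 3))  # [404, 200, 200]
```

The first call models a page from the new frontend asking for its stylesheet; the second models a page from an old frontend doing the same.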

How we fixed the issue with assets being missing for users

We realised the best way to deal with this issue was to make sure that old and new assets were both available and independent of the frontend server and its version. We did this by deploying the new version of the assets to an AWS S3 bucket before starting the upgrade process for the application. This means it does not matter which frontend instance receives a request for an asset.

For example, for 2 instances, the old frontend release process was:

- Deploy new assets to frontend-1

- Deploy new assets to frontend-2

- Upgrade the app on frontend-1

- Upgrade the app on frontend-2

The new process is:

- Deploy new assets to S3

- Upgrade the app on frontend-1

- Upgrade the app on frontend-2
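The new ordering can be sketched with an in-memory dictionary standing in for the S3 bucket. This is a hypothetical model, not the real pipeline: publish_assets, Frontend and release are illustrative names, and no AWS API is called. It shows that once every instance reads assets from one shared store that keeps old versions, an asset request succeeds whichever instance handles it, at every stage of the rollout.

```python
class Frontend:
    """A hypothetical frontend instance that serves assets from a shared store."""

    def __init__(self, name: str, version: str, bucket: dict[str, bytes]):
        self.name = name
        self.version = version
        self.bucket = bucket

    def serve_asset(self, path: str) -> int:
        # Availability depends only on the shared store, not on self.version.
        return 200 if path in self.bucket else 404


def publish_assets(bucket: dict[str, bytes], assets: dict[str, bytes]) -> None:
    """Upload one release's assets; earlier versions are never deleted."""
    bucket.update(assets)


def release(bucket, frontends, new_version, new_assets):
    """Publish assets once, then upgrade the app on each instance in turn.

    Yields after each upgrade so the caller can inspect the mid-release state.
    """
    publish_assets(bucket, new_assets)  # step 1: new assets to the shared store
    for frontend in frontends:           # remaining steps: rolling app upgrade
        frontend.version = new_version
        yield frontend


bucket: dict[str, bytes] = {}
publish_assets(bucket, {"/assets/app-1a2b3c4d.css": b"old styles"})
frontends = [Frontend("frontend-1", "old", bucket), Frontend("frontend-2", "old", bucket)]

for upgraded in release(bucket, frontends, "new", {"/assets/app-5e6f7a8b.css": b"new styles"}):
    versions = [fe.version for fe in frontends]
    statuses = {fe.serve_asset(path) for fe in frontends for path in bucket}
    print(upgraded.name, versions, statuses)
# frontend-1 ['new', 'old'] {200}
# frontend-2 ['new', 'new'] {200}
```

Because assets are published once, the number of asset uploads no longer grows with the number of instances.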

Compared with our previous approach of deploying the assets to each server before upgrading it, the current solution adds a little complexity outside the frontend servers, because it needs extra infrastructure. As the frontend only needs a small number of assets, we also keep the old versions in S3, so pages served by an old frontend can still load their assets.

Adding immutable assets

Verify developers worked together with the Reliability Engineering team to add immutable assets.

By combining the new method of deploying assets with Cache-Control headers on the assets served by the frontend, we can now tell browsers the assets are ‘immutable’.

This means the asset served at a given URL will never change, so browsers do not need to check for an updated version if they already have a copy in their cache.

As a result, pages load faster: newer browsers that understand this will neither check whether a cached asset is current nor request a new copy of it.
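A minimal sketch of choosing response headers, assuming fingerprinted asset URLs (a hash in the filename) and a one-year max-age; the post gives no cache lifetime, and the regex and cache_headers function are hypothetical. The immutable directive itself is a standard Cache-Control extension defined in RFC 8246.

```python
import re

# Assumed naming scheme: at least 8 hex characters before the file extension.
FINGERPRINTED = re.compile(r"-[0-9a-f]{8,}\.[A-Za-z0-9]+$")

ONE_YEAR = 365 * 24 * 60 * 60  # seconds; an assumed lifetime, not from the post


def cache_headers(path: str) -> dict[str, str]:
    """Choose a Cache-Control header based on whether the URL is fingerprinted."""
    if FINGERPRINTED.search(path):
        # Content at this URL never changes, so browsers can skip revalidation.
        return {"Cache-Control": f"public, max-age={ONE_YEAR}, immutable"}
    # Pages and unversioned files (an assumed policy) are revalidated each time.
    return {"Cache-Control": "no-cache"}


print(cache_headers("/assets/app-5e6f7a8b.css"))
# {'Cache-Control': 'public, max-age=31536000, immutable'}
print(cache_headers("/start"))
# {'Cache-Control': 'no-cache'}
```

Marking an asset immutable is only safe because a changed file gets a new URL; without fingerprinting, browsers could keep serving a stale copy.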

Benefits of our approach and what we learned

Changing our deployment model for the assets means pages load faster for users and deployments are quicker, because we deploy the assets once rather than once per instance as we did previously.

We learned that running microservices at scale is not just a matter of adding more copies into the load balancer. Running at scale requires planning to make sure users have a seamless experience.

If you have any thoughts on frontend deployments, we’d love to hear from you in the comments section.
